Real llms.txt examples from leading tech companies (and what they got right)

February 7, 2026

Peri Langlois

Head of Product Marketing


As AI agents become a primary interface for developers, documentation is increasingly read by models before humans. That shift changes what matters. Instead of high-level overviews, AI needs clear paths to concrete answers like authentication steps, deployment guides, and API references. llms.txt addresses this need by giving large language models a curated map of the most important documentation pages.

Vercel attributes roughly 10% of new signups to ChatGPT referrals, and teams are recognizing that AI often reads their docs before a human ever clicks a link.

That shift matters most for product documentation. When developers ask an AI agent for help, they’re not looking for positioning or overviews—they want concrete answers: how to authenticate, how to deploy, which API to use, why something is breaking. If a model can’t find or prioritize the right pages, it fills in the gaps with partial context or guesswork.

llms.txt provides a solution.

Proposed in 2024 by Jeremy Howard, llms.txt is a simple Markdown file that points large language models to the most important parts of your documentation. Instead of scraping complex HTML pages, navigation menus, and sidebars, AI tools can start from a curated set of high-value entry points.

It helps to think of llms.txt alongside tools you already use:

- sitemap.xml lists everything that exists

- robots.txt controls what crawlers are allowed to access

- llms.txt signals what matters most

As AI crawlers have grown more common, practitioners have started calling llms.txt a “treasure map” for AI. Community directories now track thousands of live implementations.

In this article, we analyze real llms.txt files from developer-focused companies, including Anthropic, Vercel, Stripe, Cloudflare, and Cursor. We’ll look at how different teams structure documentation for AI discovery, what patterns consistently work, and how you can apply those lessons to your own docs.

What llms.txt actually does (a technical overview)

llms.txt is a Markdown file that points language models to your best documentation entry points so they can answer questions accurately within a limited context.

That limit matters, but raw context window size isn't the real constraint; focus is. Models perform better with curated information than with comprehensive dumps. It’s the same problem humans face: handing someone a 500‑page manual is rarely as effective as giving them a one‑page executive summary with references.

Without an llms.txt, many AI tools fall back to your sitemap. They attempt to crawl broadly, hit rate limits, encounter navigation-heavy pages, and then either stop early or piece together answers from incomplete information.

llms.txt flips that model. You explicitly tell AI tools:

- These are the docs you should read first

- These are the concepts that matter most

- This is how the product is organized

The format is intentionally simple:

- An H1 with your product or site name

- A short blockquote describing what the product does

- H2 sections with curated lists of links

# YourProduct

> YourProduct helps developers build faster with AI-native tools

## Getting Started

- [Quickstart](https://yourproduct.com/docs/quickstart.md)

- [Installation](https://yourproduct.com/docs/install.md)

## Core Concepts

- [Architecture](https://yourproduct.com/docs/architecture.md)

- [Authentication](https://yourproduct.com/docs/auth.md)

The value comes from how you choose and order links. That ordering acts as a priority signal for models deciding what to read—and what to quote—when answering real developer questions.
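To make the format concrete, here is a minimal sketch of how a tool might read such a file into a title, a summary, and ordered sections of links. The `LlmsTxt` class and `parse_llms_txt` function are hypothetical names chosen for illustration, not part of any published library, and the regular expression only covers the simple `- [title](url): description` shape shown above.

```python
import re
from dataclasses import dataclass, field

# Matches "- [Title](url)" with an optional ": description" suffix.
LINK_RE = re.compile(
    r"^-\s*\[(?P<title>[^\]]+)\]\((?P<url>[^)\s]+)\)(?::\s*(?P<desc>.*))?$"
)


@dataclass
class LlmsTxt:
    title: str = ""
    summary: str = ""
    # Section name -> list of (title, url, description), in file order.
    sections: dict[str, list[tuple[str, str, str]]] = field(default_factory=dict)


def parse_llms_txt(text: str) -> LlmsTxt:
    """Parse the H1, blockquote, and H2 link lists of an llms.txt file."""
    doc = LlmsTxt()
    current = None
    for raw in text.splitlines():
        line = raw.strip()
        if line.startswith("# ") and not doc.title:
            doc.title = line[2:].strip()
        elif line.startswith("> ") and not doc.summary:
            doc.summary = line[2:].strip()
        elif line.startswith("## "):
            current = line[3:].strip()
            doc.sections[current] = []
        elif current is not None:
            match = LINK_RE.match(line)
            if match:
                doc.sections[current].append(
                    (match["title"], match["url"], match["desc"] or "")
                )
    return doc


SAMPLE = """# YourProduct

> YourProduct helps developers build faster with AI-native tools

## Getting Started

- [Quickstart](https://yourproduct.com/docs/quickstart.md)
- [Installation](https://yourproduct.com/docs/install.md)

## Core Concepts

- [Architecture](https://yourproduct.com/docs/architecture.md)
- [Authentication](https://yourproduct.com/docs/auth.md)
"""

doc = parse_llms_txt(SAMPLE)
for section, links in doc.sections.items():
    print(section, [title for title, _url, _desc in links])
```

Because Python dictionaries and lists keep insertion order, the parsed result preserves the ordering of sections and links, which is the priority signal described above.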

7 real llms.txt examples from leading companies

The best way to understand llms.txt is to look at how companies with very different documentation challenges use it in practice.

In the examples below, we focus on:

- Organization strategy: Whether the file is structured around products, workflows, or broader hierarchies.

- Priority signaling: How ordering and labeling communicate what matters most.

- Scope decisions: Whether teams publish a partial llms.txt index, a full export, or both.

- Decision-making: When each pattern makes sense.

1. Anthropic: Index + full export for dense technical docs

Anthropic’s documentation is dense, conceptual, and frequently consumed through AI tools. Their llms.txt setup reflects that reality. Anthropic’s docs need to support quick, real‑time answers (“How do I call the Claude API?”) and deep ingestion by IDEs and agents that want comprehensive context.

Their structure

Anthropic publishes a slim llms.txt index that links to a much larger llms‑full.txt Markdown export containing their full documentation.

Why it works

The split serves two distinct use cases:

- The index acts as a fast map for conversational AI tools

- The full export supports IDE integrations and agent workflows that can handle large inputs

Rather than overwhelming models by default, Anthropic lets tools choose the appropriate level of depth.
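As a rough illustration of how a consuming tool might choose between the two files, the sketch below picks the full export only when it fits a token budget. This is an assumption about client behavior, not a description of how Anthropic or any specific tool works. The function names are hypothetical, and the four-characters-per-token ratio is a crude heuristic rather than a real tokenizer.

```python
def estimate_tokens(text: str, chars_per_token: float = 4.0) -> int:
    """Rough size estimate. The 4 chars/token ratio is a heuristic assumption."""
    return int(len(text) / chars_per_token) + 1


def pick_source(index_text: str, full_text: str, token_budget: int) -> str:
    """Return "full" when the whole export fits the budget, otherwise "index"."""
    if estimate_tokens(full_text) <= token_budget:
        return "full"
    return "index"


# Stand-in strings: a small index and a much larger full export.
index_text = "x" * 400
full_text = "x" * 400_000

print(pick_source(index_text, full_text, token_budget=8_000))
print(pick_source(index_text, full_text, token_budget=200_000))
```

A chat assistant with a small budget would land on the index and follow individual links, while an ingestion pipeline with a large budget could take the full export in one pass.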

When to copy this pattern

If your documentation is conceptual, AI‑native, or frequently embedded into developer tools, the index + export approach keeps ingestion predictable without sacrificing completeness.

2. Vercel: Product‑first organization for AI‑driven discovery

Vercel's llms.txt implementation demonstrates how to organize documentation for a multi-product company. Developers may arrive asking about Next.js, the AI SDK, or Blob storage, and AI tools need help understanding how those pieces fit together.

Their structure

Vercel groups documentation by major product areas and consistently surfaces:

- Quickstarts

- Core concepts

- High‑intent guides

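They avoid listing every page and instead focus on entry points that map to common developer questions. The excerpt below shows how a single page is presented, with metadata (title, description, last_updated, source) ahead of the Markdown body.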

title: "Account Management"

description: "Learn how to manage your Vercel account and team members."

last_updated: "2025-12-23T06:39:29.940Z"

source: "https://vercel.com/docs/accounts"

--------------------------------------------------------------------------------

# Account Management

When you first sign up for Vercel, you'll create an account. This account is used to manage your Vercel resources. Vercel has three types of plans:

- [Hobby](/docs/plans/hobby)

- [Pro](/docs/plans/pro)

- [Enterprise](/docs/plans/enterprise)

Each plan offers different features and resources, allowing you to choose the right plan for your needs.

When signing up for Vercel, you can choose to sign up with an email address or a Git provider.

## Sign up with email

To sign up with email:

1. Enter your email address to receive the six-digit one-time password (OTP)

2. Enter the OTP to proceed with logging in successfully.

When signing up with your email, no Git provider will be connected by default. See [login methods and connections](#login-methods-and-connections) for information on how to connect a Git provider. If no Git provider is connected, you will be asked to verify your account on every login attempt.

## Sign up with a Git provider

You can sign up with any of the following supported Git providers:

- [**GitHub**](/docs/git/vercel-for-github)

- [**GitLab**](/docs/git/vercel-for-gitlab)

- [**Bitbucket**](/docs/git/vercel-for-bitbucket)

Authorize Vercel to access your Git provider account. **This will be the default login connection on your account**.

Once signed up you can manage your login connections in the [authentication section](/account/authentication) of your dashboard.

Why it works

The file reads less like a sitemap and more like a curated menu. It’s optimized for discovery, not completeness.

When to copy this pattern

If your product portfolio is expanding and developers often discover you through AI tools, organize llms.txt around products—not internal doc categories.

3. Stripe: Making a large API surface legible to models

Stripe’s documentation spans payments, billing, fraud, issuing, and more. Their llms.txt shows how to make a sprawling API ecosystem understandable. Models need to guide developers toward the right part of Stripe’s API without enumerating hundreds of endpoints.

Their structure

Stripe organizes llms.txt around major product and resource areas—Payments, Checkout, Webhooks, Testing—mirroring their API architecture.

# Stripe Documentation

## Docs

- [Testing](https://docs.stripe.com/testing.md): Simulate payments to test your integration.

- [API Reference](https://docs.stripe.com/api.md)

- [Webhooks](https://docs.stripe.com/webhooks.md): Listen for events from Stripe

## Payment Methods

- [Payment Methods API](https://docs.stripe.com/payments/payment-methods.md): Learn more about the API

- [Bank Debits](https://docs.stripe.com/payments/bank-debits.md)

- [How cards work](https://docs.stripe.com/payments/cards/overview.md)

## Checkout

- [Use a prebuilt Stripe-hosted payment page](https://docs.stripe.com/payments/checkout.md)

- [Customize Checkout](https://docs.stripe.com/payments/checkout/customization.md)

Each section contains a small number of curated links with descriptive text, not just titles.
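Descriptions are easy to miss when a file is maintained by hand. As a small sketch of how you might check your own file, the function below lists links that have a title but no description. `links_missing_descriptions` is a hypothetical helper written for this article, and it assumes the simple `- [title](url): description` link shape.

```python
import re

# Groups: 1 = title, 2 = url, 3 = optional description after a colon.
LINK_RE = re.compile(r"^-\s*\[([^\]]+)\]\(([^)\s]+)\)(?::\s*(.*))?$")


def links_missing_descriptions(text: str) -> list[str]:
    """Return the titles of links that carry no descriptive text."""
    missing = []
    for line in text.splitlines():
        match = LINK_RE.match(line.strip())
        if match and not (match.group(3) or "").strip():
            missing.append(match.group(1))
    return missing


EXCERPT = """## Docs
- [Testing](https://docs.stripe.com/testing.md): Simulate payments to test your integration.
- [API Reference](https://docs.stripe.com/api.md)
- [Webhooks](https://docs.stripe.com/webhooks.md): Listen for events from Stripe
"""

print(links_missing_descriptions(EXCERPT))
```

Run against the three "Docs" links from the excerpt above, this flags only the API Reference entry, which is the one link in that group without a description.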

Why it works

The structure teaches models how Stripe thinks about its own platform. That makes it easier for AI tools to recommend correct starting points instead of generic answers.

When to copy this pattern

If your docs are API‑centric, mirror your resource hierarchy and surface stable entry points rather than exhaustive references.

4. AI dev tools (Cursor, Windsurf, Bolt): Workflow‑first documentation

AI‑first developer tools are often consumed entirely through assistants and IDEs. Their llms.txt files reflect that reality. When a model is embedded in the workflow, it needs to answer questions in the moment, from setup and configuration to troubleshooting.

Their structure

All three tools structure their llms.txt files around what developers need to accomplish, but they emphasize different aspects of the development lifecycle:

- Cursor leads with AI agent capabilities such as Agent modes, Background Agents, and CLI tools, reflecting their core value proposition of AI-powered coding assistance. Configuration and context management follow, supporting the "how do I customize my AI coding experience?" queries.

# Cursor

## Docs

- [Agent Security](https://docs.cursor.com/en/account/agent-security.md): Security considerations for using Cursor Agent

- [Billing](https://docs.cursor.com/en/account/billing.md): Managing Cursor subscriptions, refunds, and invoices

- [Pricing](https://docs.cursor.com/en/account/pricing.md): Cursor's plans and their pricing

- [Admin API](https://docs.cursor.com/en/account/teams/admin-api.md): Access team metrics, usage data, and spending information via API

- [AI Code Tracking API](https://docs.cursor.com/en/account/teams/ai-code-tracking-api.md): Access AI-generated code analytics for your team's repositories

- [Analytics](https://docs.cursor.com/en/account/teams/analytics.md): Track team usage and activity metrics

- [Analytics V2](https://docs.cursor.com/en/account/teams/analyticsV2.md): Advanced team usage and activity metrics tracking

- [Dashboard](https://docs.cursor.com/en/account/teams/dashboard.md): Manage billing, usage, and team settings from your dashboard

- [Enterprise Settings](https://docs.cursor.com/en/account/teams/enterprise-settings.md): Centrally manage Cursor settings for your organization

- [Members & Roles](https://docs.cursor.com/en/account/teams/members.md): Manage team members and roles

- [SCIM](https://docs.cursor.com/en/account/teams/scim.md): Set up SCIM provisioning for automated user and group management

- [Get Started](https://docs.cursor.com/en/account/teams/setup.md): Create

- Windsurf prioritizes workflow optimization features: Autocomplete, Chat, Command, and Context Awareness sit at the top. Their emphasis on "Best Practices" and "Prompt Engineering" signals a focus on helping developers extract maximum value from AI tooling.

- Bolt follows the product lifecycle: account & subscription, building, cloud, integrations, and troubleshooting. This mirrors a developer's journey from "getting started" to "deploying and scaling," reflecting Bolt's position as an end-to-end platform.

While each tool emphasizes different features, they share common traits:

- Quickstarts appear immediately

- Integration docs are prominent

- Troubleshooting has dedicated sections

Why it works

The llms.txt organization answers the implicit question “what does a developer need to do right now?”

When to copy this pattern

If your product is used inside IDEs or AI agents, build llms.txt around workflows.

5. Infrastructure platforms (Cloudflare, Supabase): Solving the orientation problem

When you're building on infrastructure platforms, you often compose solutions from multiple products. AI tools need to understand both what each product does and how the products fit together.

Their structure

Cloudflare and Supabase structure their llms.txt to solve the "I'm lost in your ecosystem" problem, but they take different routes:

- Cloudflare organizes by product vertical with substantial depth. Each section (Agents, AI Gateway, Workers, Pages, R2, etc.) includes Getting Started, Configuration, API Reference, and Tutorials. The file is long, running to thousands of lines across 20+ products. This structure makes sense because the grounding question is "which Cloudflare product solves my problem, and how do I connect it to another product I'm using?" rather than "where is this specific doc?"

# Cloudflare Developer Documentation Easily build and deploy full-stack applications everywhere...

## Agents

- [Build Agents on Cloudflare](https://developers.cloudflare.com/agents/index.md)

- [Patterns](https://developers.cloudflare.com/agents/patterns/index.md)

- [Agent class internals](https://developers.cloudflare.com/agents/concepts/agent-class/index.md)

- [Human in the Loop](https://developers.cloudflare.com/agents/concepts/human-in-the-loop/index.md)

- [Calling LLMs](https://developers.cloudflare.com/agents/concepts/calling-llms/index.md)

...

## AI Gateway

- [AI Assistant](https://developers.cloudflare.com/ai-gateway/ai/index.md)

- [REST API reference](https://developers.cloudflare.com/ai-gateway/api-reference/index.md)

- Supabase takes a language-segmented approach. Rather than one comprehensive file, they offer separate exports by framework: JavaScript, Dart, Swift, Kotlin, Python, C#, plus CLI reference. This solves the orientation problem differently: "show me only what's relevant to my stack" rather than "show me everything you offer."

Why it works

For a platform with dozens of independent features, a comprehensive index prevents models from missing entire areas of the product. Depth is handled elsewhere, rather than forcing everything into a single file.

When to copy this pattern

If you offer many composable products, llms.txt should solve orientation first, either by product vertical or by developer stack.

6. LangGraph: Documenting the documentation strategy

LangGraph is worth highlighting separately because it documented its strategy publicly. Their llms.txt docs are designed to be embedded into IDEs and agent systems that need large, structured inputs.

Their structure

- A slim llms.txt index containing links with brief descriptions of the content.

- A comprehensive llms‑full.txt export including all the detailed content directly in a single file, eliminating the need for additional navigation.

- llms.txt and llms‑full.txt for each programming language

# LangGraph

## Quickstart

These guides are designed to help you get started with LangGraph.

- [LangGraph Quickstart](https://langchain-ai.github.io/langgraphjs/tutorials/quickstart/): Build a chatbot that can use tools and keep track of conversation history. Add human-in-the-loop capabilities and explore how time-travel works.

- [Common Workflows](https://langchain-ai.github.io/langgraphjs/tutorials/workflows/): Overview of the most common workflows using LLMs implemented with LangGraph.

- [LangGraph Server Quickstart](https://langchain-ai.github.io/langgraphjs/tutorials/langgraph-platform/local-server/): Launch a LangGraph server locally and interact with it using REST API and LangGraph Studio Web UI.

- [Deploy with LangGraph Cloud Quickstart](https://langchain-ai.github.io/langgraphjs/cloud/quick_start/): Deploy a LangGraph app using LangGraph Cloud.

## Concepts

Why it works

LangGraph recognized that IDE integrations need a different documentation structure than real-time chat tools. The full export is optimized for:

- Chunking: Breaking docs into semantic sections that can be indexed separately

- Retrieval: Including enough context in each section that it's useful in isolation

- Versioning: Clear signals about which version of the library the docs correspond to

They also provide usage guidance for tool builders, explaining how to parse and consume their llms-full.txt effectively, depending on the programming language.
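To show what chunking with retained context can look like on the consuming side, here is a minimal sketch that splits a Markdown export at H1 and H2 headings and tags each chunk with its heading trail. It is not LangGraph's own tooling. `chunk_by_heading` is a hypothetical function, and it does not special-case fenced code, so a `#` comment line inside a code sample would be misread as a heading.

```python
def chunk_by_heading(markdown: str, max_level: int = 2) -> list[dict]:
    """Split Markdown at headings up to max_level, keeping the heading trail."""
    chunks = []
    path = []  # current heading trail, e.g. ["LangGraph", "Quickstart"]
    body = []  # lines collected since the last heading

    def flush() -> None:
        text = "\n".join(body).strip()
        if text:
            chunks.append({"path": " > ".join(path), "text": text})
        body.clear()

    for line in markdown.splitlines():
        stripped = line.lstrip()
        level = len(stripped) - len(stripped.lstrip("#"))
        is_heading = 0 < level <= max_level and stripped[level:level + 1] == " "
        if is_heading:
            flush()
            del path[level - 1:]  # drop headings at this depth and deeper
            path.append(stripped[level:].strip())
        else:
            body.append(line)
    flush()
    return chunks


SAMPLE = (
    "# LangGraph\n\n"
    "## Quickstart\n\nBuild a chatbot.\n\n"
    "## Concepts\n\nGraphs and state.\n"
)

for chunk in chunk_by_heading(SAMPLE):
    print(chunk["path"], "|", chunk["text"])
```

Storing the heading trail with each chunk is one simple way to keep a section useful in isolation. A version label could be attached to each chunk in the same way as extra metadata.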

When to copy this pattern

If you're building developer tools or have docs that get embedded in IDEs, study LangGraph's approach. The "index + full export + usage documentation" pattern is the gold standard.

7. Mintlify: what we learned implementing llms.txt ourselves

When you build AI-native documentation tools, your own docs become both a product demo and a proof of concept. Our llms.txt implementation reveals what we prioritize when we're designing for both human readers and the AI tools reading on their behalf.

Our structure

- Product-first, but includes "How it works" technical detail and descriptive text

- Separate sections for different user types (developers using Mintlify vs. readers of Mintlify-powered docs)

- Links to specific features rather than a generic "Documentation" page

# Mintlify

## Docs

- [AI-native documentation](https://mintlify.com/docs/ai-native.md): Learn how AI enhances reading, writing, and discovering your documentation

- [Agent](https://mintlify.com/docs/ai/agent.md): Automate documentation updates with the agent. Create updates from Slack messages, PRs, or API calls.

- [Assistant](https://mintlify.com/docs/ai/assistant.md): Add AI-powered chat to your docs that answers questions, cites sources, and generates code examples.

- [Contextual menu](https://mintlify.com/docs/ai/contextual-menu.md): Add one-click AI integrations to your docs.

- [Discord bot](https://mintlify.com/docs/ai/discord.md): Add a bot to your Discord server that answers questions based on your documentation.

- [llms.txt](https://mintlify.com/docs/ai/llmstxt.md): Optimize your docs for LLMs to read and index.

- [Markdown export](https://mintlify.com/docs/ai/markdown-export.md): Quickly get Markdown versions of pages for AI tools and integrations.

- [Model Context Protocol](https://mintlify.com/docs/ai/model-context-protocol.md): Connect your documentation and API endpoints to AI tools with a hosted MCP server.

- [Slack bot](https://mintlify.com/docs/ai/slack-bot.md): Add a bot to your Slack workspace that answers questions based on your documentation.

If you're building documentation with Mintlify, consider how your own llms.txt should be structured:

- Organize by user journey, not site hierarchy. Your navigation might be alphabetical or feature-grouped, but your llms.txt should reflect how users actually accomplish goals.

- Prioritize by frequency, not importance. Put the docs that answer 80% of questions in the first 20% of your file.

- Segment by role when relevant. If you serve developers, product managers, and end users, consider separate sections or even separate files.
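One way to act on the frequency advice is to rank pages by how often they answer a question and watch the cumulative share. The sketch below assumes you already have per-page counts from search logs or support tickets. The counts and page names are made up for illustration, and `order_by_frequency` is a hypothetical helper.

```python
def order_by_frequency(question_counts: dict[str, int]) -> list[tuple[str, float]]:
    """Rank pages by question count and attach the cumulative share covered."""
    total = sum(question_counts.values())
    if total == 0:
        return []
    ranked = sorted(question_counts.items(), key=lambda item: item[1], reverse=True)
    ordered = []
    running = 0
    for page, count in ranked:
        running += count
        ordered.append((page, running / total))
    return ordered


# Hypothetical counts of questions answered by each page.
counts = {"quickstart": 50, "auth": 30, "changelog": 5, "deploy": 15}

for page, share in order_by_frequency(counts):
    print(f"{page}: {share:.0%} of questions covered so far")
```

In this made-up data, the first two pages already cover 80% of questions, so they would belong at the top of the file.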

Takeaway: Your llms.txt is a strategic asset. Structure it to answer the question: "What does my user need to accomplish right now?"

Patterns and best practices across all llms.txt examples

Across these llms.txt examples, a handful of repeatable patterns emerge that you can apply regardless of company size or stack.

"Catalog" pattern (Stripe, Cloudflare)

- Group by product/feature

- Keep sections to a small number of high-value links (as shown in llmstxt.studio's Stripe analysis and directory examples)

- Each link gets descriptive text (not "API Reference" but "Payments API: Charges and Payment Intents")

- Section intros provide one-sentence context

When to use: Multi-product platforms, extensive API surfaces, infrastructure companies

"Focused workflow" pattern (Cursor, Windsurf, Bolt.new)

- Spotlight workflows, setup, and troubleshooting docs tied to in-product usage

- Organize around in-product workflows, not documentation taxonomy

- Keep total token count low—only essential docs

When to use: Developer tools, IDE integrations, AI-native products

"Index + export" pattern (Anthropic, Vercel, LangGraph)

- Slim llms.txt index plus llms-full.txt for ingestion (explained in LangGraph's overview and Vercel's implementation)

- Index serves real-time AI assistants (ChatGPT, Claude)

- Full export serves ingestion pipelines (IDE indexing, RAG systems)

When to use: Dense documentation, AI/ML products, tools with IDE integrations

A starter llms.txt template

Based on everything we’ve discussed, here's a starter template modeled on the "catalog" pattern.

# YourProduct

> YourProduct helps developers [one-sentence value prop]

## Getting Started

Quick paths to first success

- [5-Minute Quickstart](link-to-quickstart.md)

- [Installation Guide](link-to-install.md)

- [Hello World Tutorial](link-to-tutorial.md)

## Core Concepts

Fundamental ideas to understand how YourProduct works

- [Architecture Overview](link)

- [Key Terminology](link)

- [How Authentication Works](link)

## Guides

Common use cases and workflows

- [Deploying to Production](link)

- [Integrating with GitHub](link)

- [Monitoring and Debugging](link)

## API Reference

- [REST API Documentation](link)

- [SDK Reference](link)

- [Webhooks Guide](link)

## Resources

- [Migration Guide from v1 to v2](link)

- [Troubleshooting Common Issues](link)

- [Community and Support](link)

Start small. Ship a first version. Then refine it based on how AI tools actually surface your docs.
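If you would rather generate the file than hand-edit it, the template can be rendered from a small data structure. This is a minimal sketch under stated assumptions: `render_llms_txt` is a hypothetical function, and the product name, URL, and description are placeholders.

```python
def render_llms_txt(
    name: str,
    summary: str,
    sections: dict[str, list[tuple[str, str, str]]],
) -> str:
    """Render an H1, a blockquote summary, and H2 sections of links."""
    lines = [f"# {name}", "", f"> {summary}"]
    for heading, links in sections.items():
        lines += ["", f"## {heading}", ""]
        for title, url, description in links:
            entry = f"- [{title}]({url})"
            if description:
                entry += f": {description}"
            lines.append(entry)
    return "\n".join(lines) + "\n"


# Placeholder content; replace with your own pages.
output = render_llms_txt(
    "YourProduct",
    "YourProduct helps developers ship faster",
    {
        "Getting Started": [
            (
                "Quickstart",
                "https://example.com/docs/quickstart.md",
                "First deploy in five minutes",
            ),
        ],
    },
)
print(output)
```

Keeping the sections in a dictionary makes reordering cheap, so you can adjust priority as you learn which pages AI tools surface.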

Ready to implement llms.txt?

Your llms.txt acts as a priority map for AI tools, so make sure it points them (and your users) in the right direction.

For Mintlify users: We generate and maintain llms.txt automatically from your existing documentation. For everyone else: Our generator can create a starter file based on your site structure, or you can follow this implementation guide.

More blog posts to read

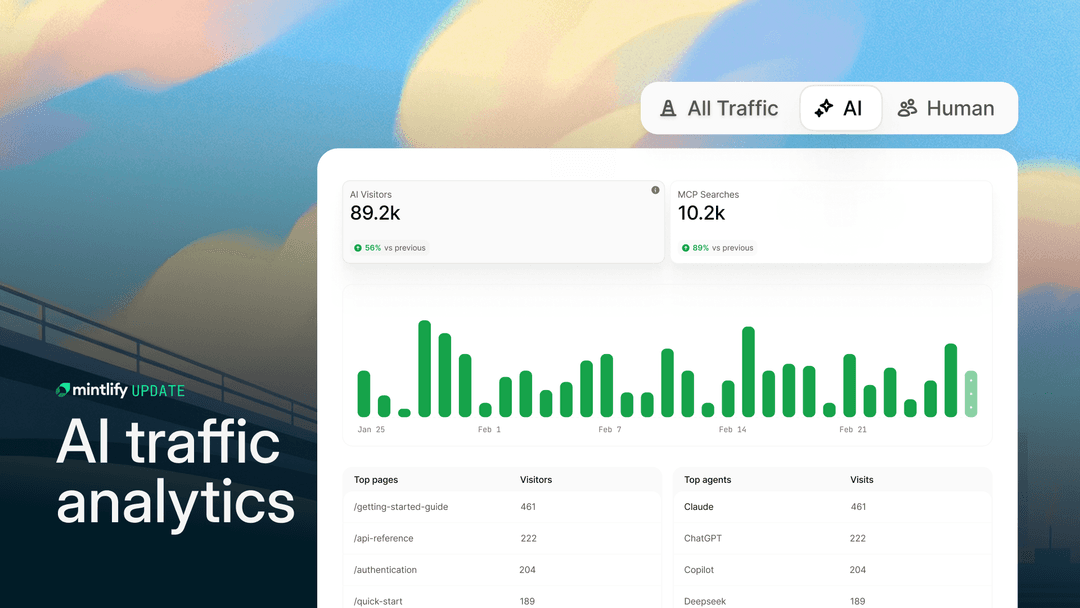

Analytics for AI and agent traffic

See how AI agents use your documentation and why building docs for agent consumption is becoming essential.

February 2, 2026Peri Langlois

Head of Product Marketing

A better way to edit and publish in Mintlify

A new web editor that brings publishing, editing, and previewing into one workflow for anyone on your team.

January 30, 2026Peri Langlois

Head of Product Marketing

Peri Langlois

Head of Product Marketing